
I am an MS student in Robotics at the Robotics Institute, Carnegie Mellon University, advised by Prof. Srinivasa Narasimhan. I collaborate closely with Prof. Sebastian Scherer and Prof. Laszlo Jeni and am a member of CMU’s Team Chiron, competing in the DARPA Triage Challenge. My research lies at the intersection of computer vision and robotics, focusing on developing autonomous systems for human analysis.

Before coming to Pittsburgh, I worked as a research assistant at the Indian Institute of Science, Bangalore, under the guidance of Prof. Venkatesh Babu. My work there focused on improving learning algorithms for deep neural networks so that they generalize better on real-world datasets with class imbalance.

Previously, I completed my undergraduate degree in computer science at the University of Petroleum and Energy Studies, Dehradun. I have also had the privilege of working under the direction of Dr. Tanupriya Choudhury and Dr. Tanmay Sarkar on various research projects focusing on applications of computer vision.

Email | GitHub | Medium | Google Scholar


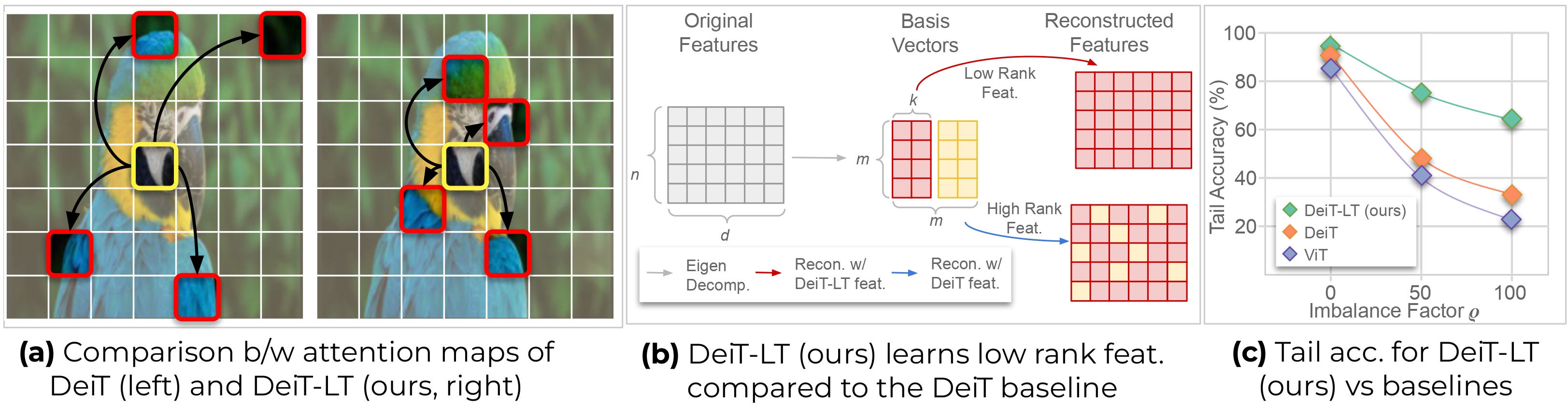

Harsh Rangwani*, Pradipto Mondal*, Mayank Mishra*, Ashish Ramayee Asokan, & R. Venkatesh Babu (* denotes equal contribution)

Conference on Computer Vision and Pattern Recognition (CVPR 2024)

Introduced a distillation strategy to train a Vision Transformer from scratch on class-imbalanced datasets.


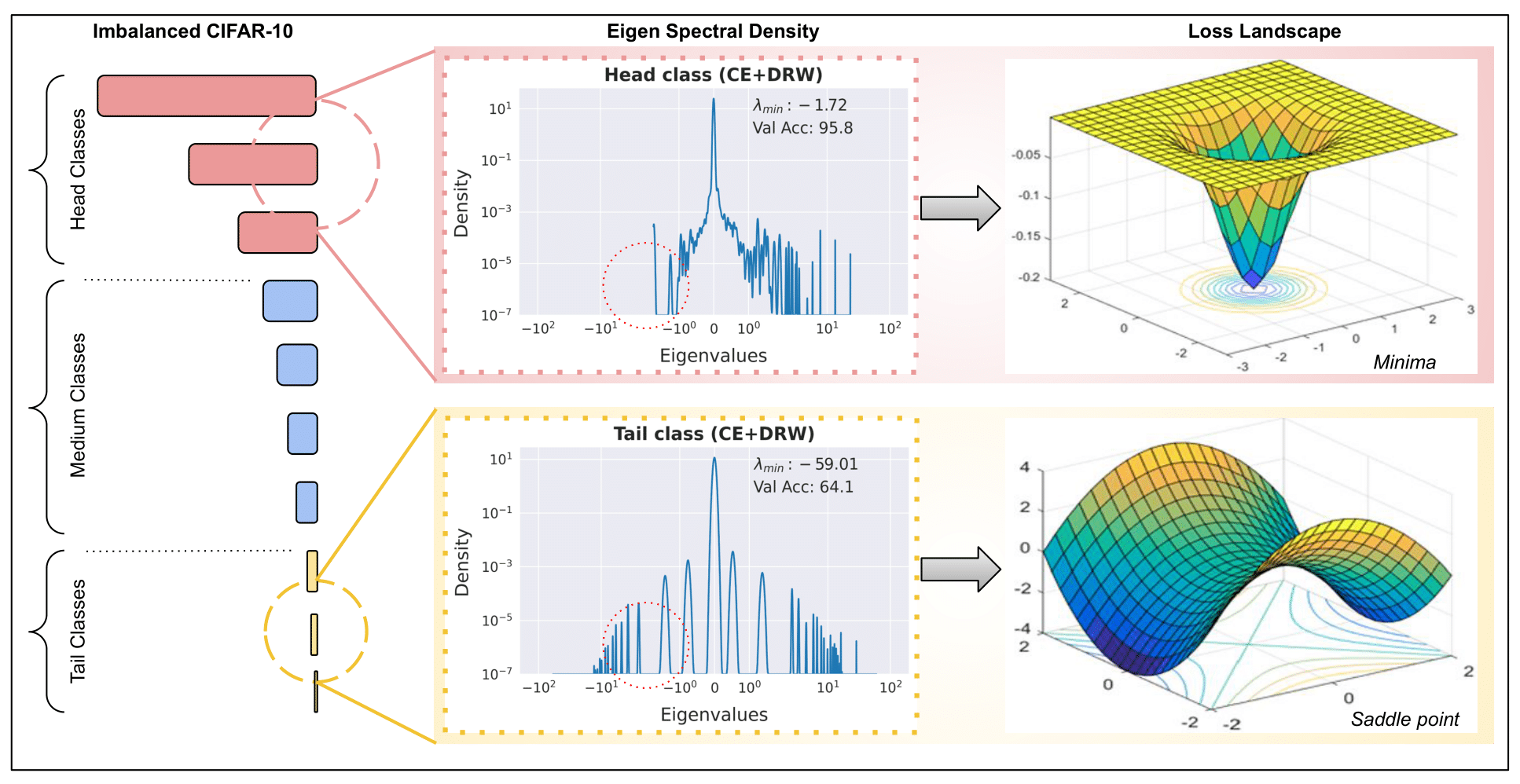

Harsh Rangwani, Sumukh K Aithal, Mayank Mishra, & R. Venkatesh Babu

Neural Information Processing Systems (NeurIPS 2022)

Examined the spectral density of the Hessian of the class-wise loss to show that network weights converge to a saddle point in the loss landscape of minority classes. Furthermore, showed that methods that encourage convergence to flatter minima can be used to escape saddle points for minority classes.


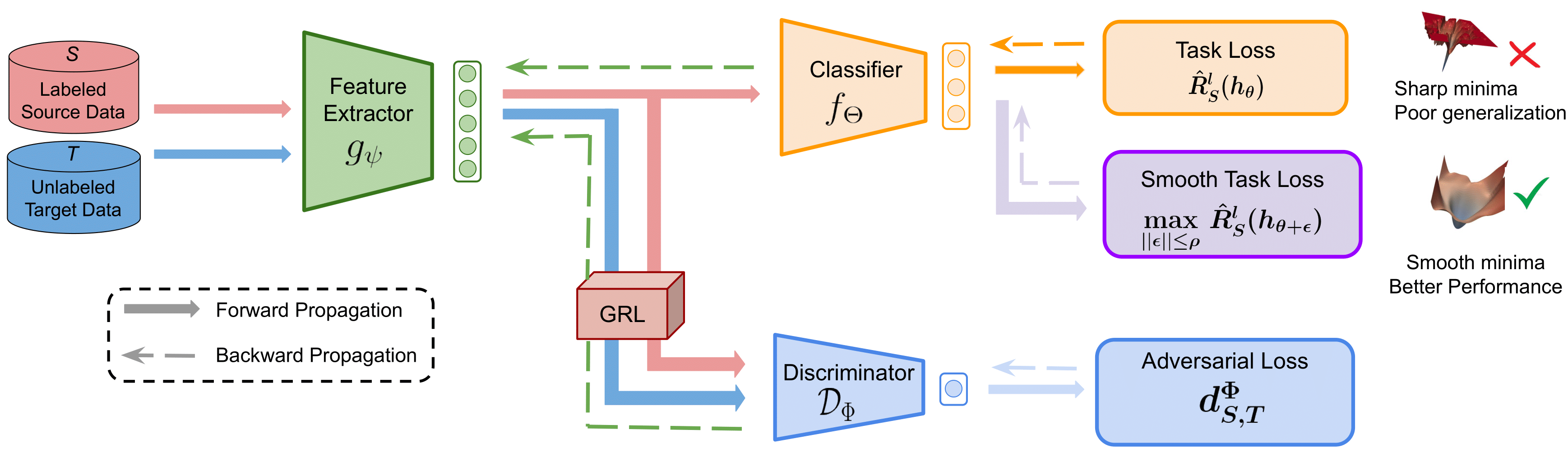

Harsh Rangwani, Sumukh K Aithal, Mayank Mishra, Arihant Jain, & R. Venkatesh Babu

International Conference on Machine Learning (ICML 2022)

Analysed the effect of the smoothness of the loss landscape in domain adversarial training and achieved state-of-the-art (SOTA) results for domain adaptation on the Office-Home and VisDA-2017 datasets.


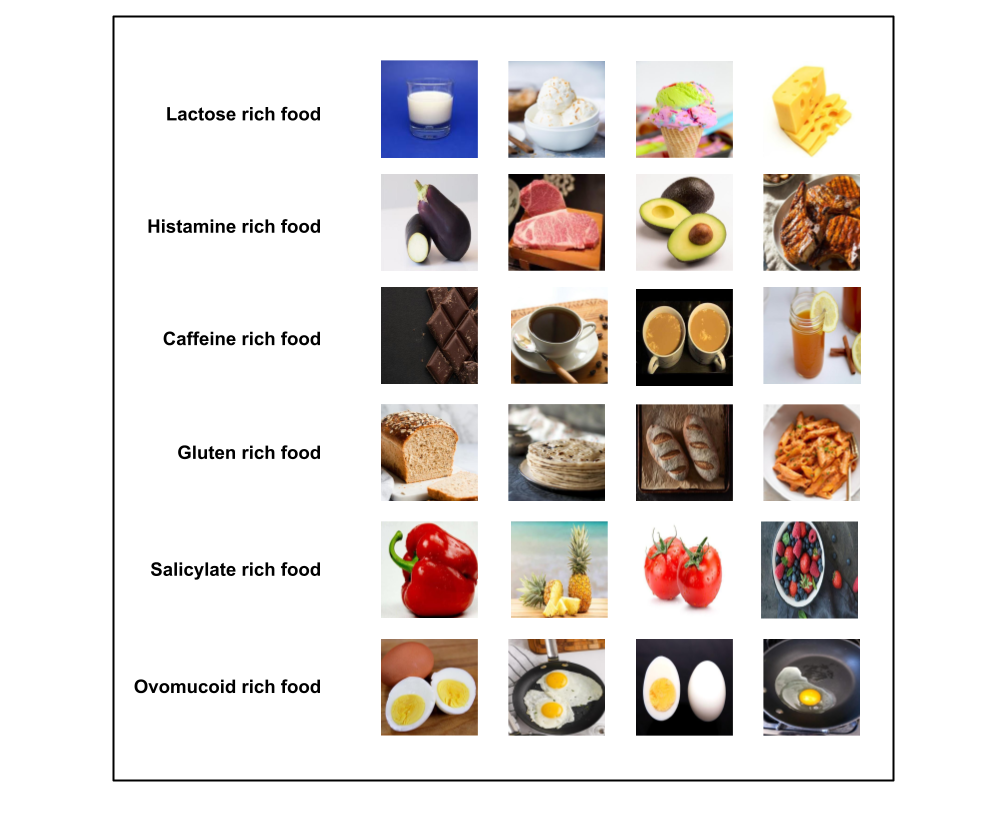

Mayank Mishra, Tanmay Sarkar, Tanupriya Choudhury, Nikunj Bansal, Slim Smaoui, Maksim Rebezov, Mohammad Ali Shariati, & Jose Manuel Lorenzo

Journal: Food Analytical Methods (SCI, IF 3.5, Springer)

Introduced Allergen30, a custom-made dataset of 6,000+ images covering 30 commonly used food items that can trigger an allergic reaction. This work is one of the first research attempts to train a deep-learning-based object detection model to detect such food items in images.


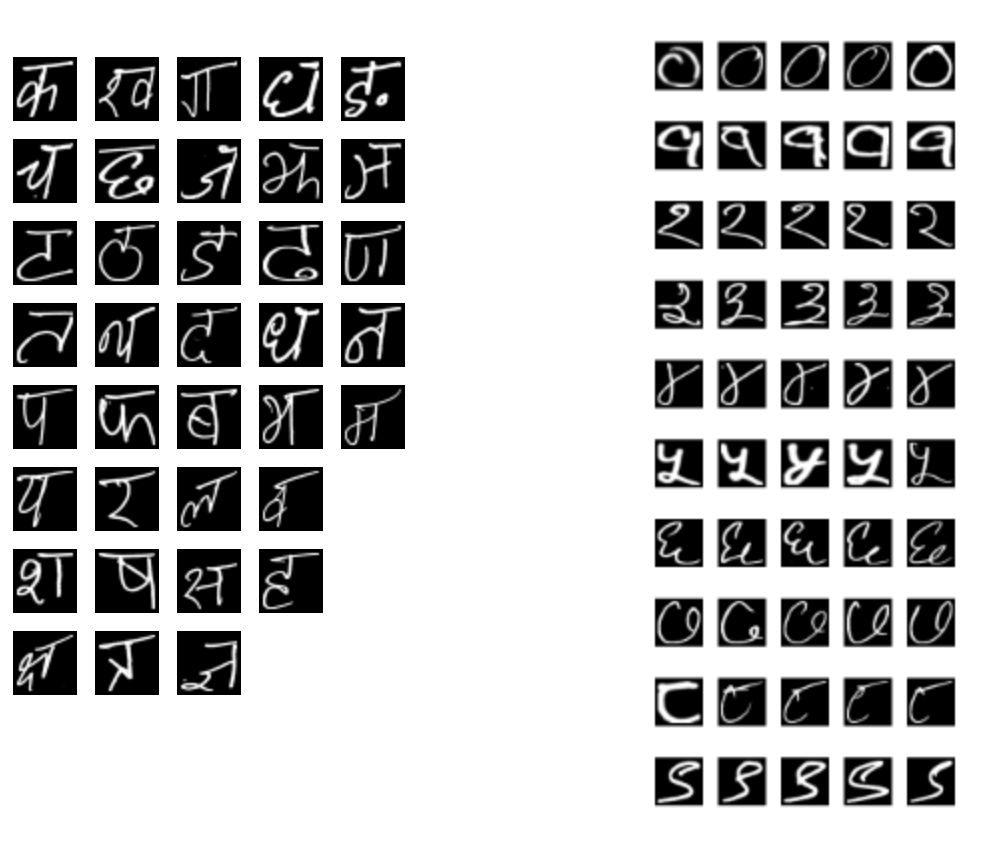

Mayank Mishra, Tanupriya Choudhury, & Tanmay Sarkar

IEEE India Council International Sub-Sections Conference (INDISCON 21)

Built a handwritten character classifier for the Devanagari (Indic) script using ResNet. The model achieved a SOTA accuracy of 99.72% on the Devanagari Handwritten Character Dataset.


Mayank Mishra, Tanupriya Choudhury, & Tanmay Sarkar

The Patent Office Journal No. 17/2021, dated 23/04/2021, Pg. No. 20373


Article chosen for further distribution by Medium.

This article covers two introductory topics in computer vision: image formation and image representation. The first half briefly explains how an image is formed, the factors it depends on, and the image-sensing pipeline in a digital camera. The second half discusses various ways of representing images and some of the operations that can be performed on them.


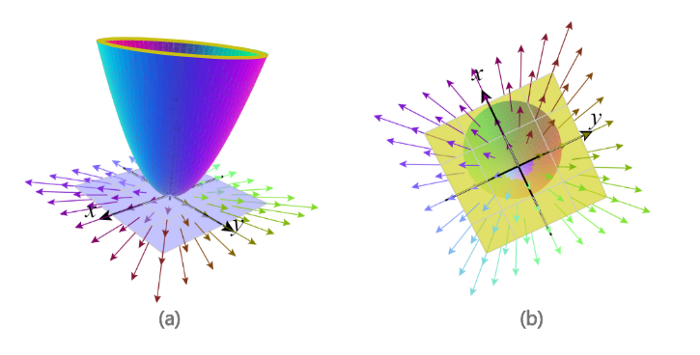

This article uses vector calculus (partial derivatives, gradients, and directional derivatives) to give an easy-to-understand explanation of why the gradient is subtracted in the gradient descent algorithm.


Visit here for more articles


Template modified from here.